Methods, Techniques, and Tools to Achieve Robust Embedded Testing

In software engineering, we test primarily to diminish risks. Errors are costly, and testing serves as a safety net for the full software system.

However, reducing risk is not the only purpose of testing. We can also test to build understanding, just as software engineers should when writing a program, and as racing drivers do when learning a new track or car.

The parallels between racing and software development may not be immediately evident. However, both require the design and implementation of solid processes to secure success.

My day job is to help large organizations produce, maintain, and support quality software through rigorous testing and process improvement. I am passionate about development and have been lucky enough to collaborate on several software projects with highly regarded developers and their teams.

It is interesting to see software development as a process in action across many projects rather than a one-off. On successful projects, the processes, the strategy, are always very simple.

The result is reliable, timely, feature-rich, and high-performance software. However, as development speeds up, these processes must be challenged, as they may be too static (tending towards waterfall) or too dynamic (tending towards agile practices).

This article introduces methods, techniques, and tools to ensure clarity of thinking and implementation during development of project processes.

Three things to consider when designing a development process

There are three principal areas to consider when designing a development process:

- First, there are the people involved in the project.

- Second, there are the processes that shape the environment in which they work.

- Finally, there are the tools: the infrastructure and organisation that they have at their disposal.

Having all three in place is vital if you are to build quality software.

Poor Process Danger Signs

For many developers, the word process is not particularly inspiring. For many it is a bit of a turn off. We have software developers trained to work in set environments. But technologies change. Styles of working change. Times change. The quality and practices of software development have to keep pace with the changes.

We’ve seen this with the cycle of interest in Agile Software Development over the last decade.

“It’ll never happen in the field.”

“Optimise later.”

“Perfection is the enemy of good.”

“It’s good enough.”

Every developer has heard at least one of these phrases.

They teach us to focus on making the software as bug-free as possible, as if it will run in a fixed environment for all eternity… with no bugs, no changes in requirements, and no changing business needs.

Yet, in reality, that is rarely the case.

The problem is, we don’t build in enough flexibility. We don’t allow for changing requirements.

Embedded Software

In all safety-critical industries, the focus is on flawless embedded software. So we spend time testing our software. By comparison, however, we don’t spend as much time evolving and using our software. Even when we do, our mindset is still linear…

We build, we test, we release.

We spend so much of our time building and maintaining test suites that we don’t have time to make adjustments to the software or to the requirements.

Our job should be to push the software to cope with uncertainty and develop the requirements. Instead, much of our time is on the build system, the tests and other non-deliverable work.

The solution is to give developers the correct tools to produce great software, so they don’t need to make their own. If we don’t, the result is software that is brittle.

As embedded software developers, our focus is to accelerate and improve software development in the pursuit of perfection. Poor code can look the same in an Agile project as in a project using traditional processes. But with the right tools, it’s much cheaper to make changes. And much more flexible.

So let’s look at the elements that come together to make a successful, fully tested, embedded application.

Important aspects discussed include requirements traceability, software metrics, testing frameworks, code coverage and automation.

Consider your stakeholders (people)

All those involved in developing software can benefit from a well-defined process. So how do we go about creating a robust process? We begin by considering the various stakeholders… What are their primary roles? What is the business need? Who are their customers?

We need to ensure that there are clear responsibilities for each stakeholder. This will help us determine the boundaries. This will also make communication between stakeholders much easier:

Developers want a clean, automated build environment.

QA want the developers to spend more time testing.

Project management wants both things.

But real life is never simple. So, let’s start breaking this down.

Consider your Methodology

There are several core methodologies that we can borrow from, which broadly fall into the ‘waterfall’ or ‘agile’ buckets. Note that these methodologies have grown over many years. There is no ‘right’ way.

Waterfall

The most successful methodologies have a built-in framework to handle uncertainty and divergent requirements, while providing for testing, refinement, and backtracking. For instance, formal software methodologies concentrate on getting the requirements right before we build stuff.

Agile methodologies

Agile methodologies, however, concentrate on engineering solutions and getting it done.

Like many things in life, it is a spectrum… there are good and bad things about each method. And there are good and bad ways to implement each method. Since we are trying to balance stakeholder requirements, I propose a hybrid approach is usually best – with elements taken from both approaches.

Most software development projects follow, or at least approximate, a V-shape in their efforts. There is usually a beginning phase, some design, coding and testing, and finally delivery.

The height of the V depends on the project requirements. By examining the process from the perspective of your team members, we can strengthen this software engineering process as a series of phases.

The phases are likely to be… Plan, Code, Build, Test, Repeat.

The most critical testing stages to get right are dynamic unit and integration testing, before moving on to higher levels of testing. Throughout each testing phase we should also ask, “is the code tested enough?” This can be answered by monitoring three types of coverage: code, test, and requirements.

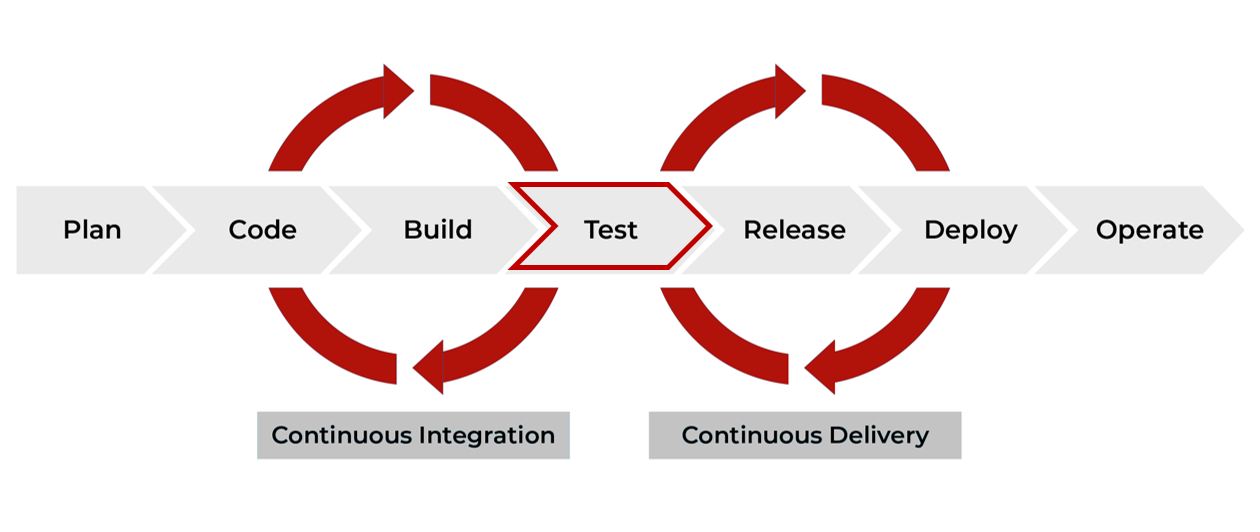

Continuous Integration / Continuous Delivery

The Continuous Integration model is more novel in the safety-critical space, but it is firmly established in enterprise software. Let’s explore the advantages it brings in a little more detail.

To begin with, this model allows us to place the testing phase at the centre of both the development and delivery loops, whereas the more traditional process places the testing phases at the end.

When using a CI model, we can refactor the product when new requirements are identified or existing ones change.

We can adapt the build and delivery methods as the project progresses. We can even deliver the software in separate modules on demand. But most importantly, the developer, tester, and stakeholder can view the progress of the project in real-time.

Implementing Continuous Integration requires a well-designed build pipeline and automation. With the correct tools, CI can help increase productivity through increased test coverage, faster builds and delivery, delivery of requirements at the right time (rather than at the end), and reduced risk.

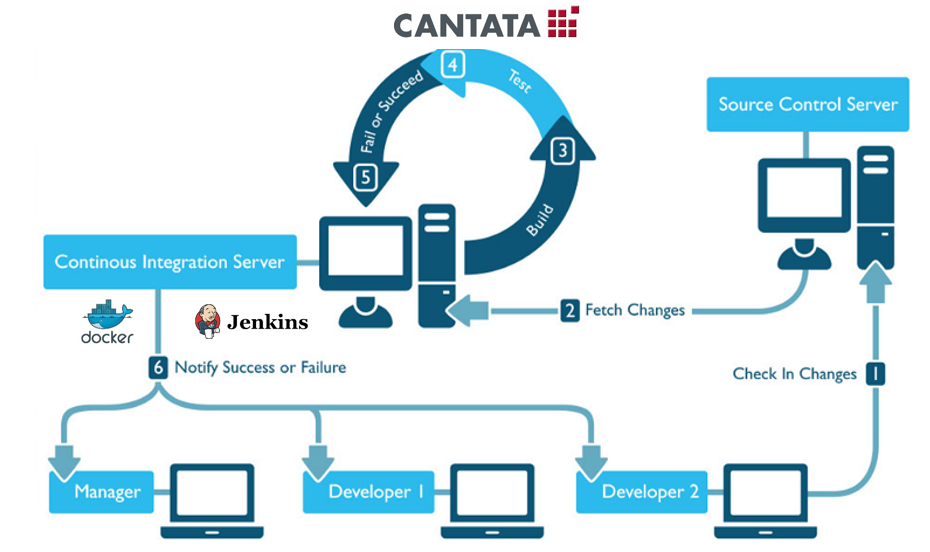

This diagram breaks down the technical side of the hybrid development implementation that our customers would typically adopt.

Here we can see the tools that we would need to work with at each stage. Testing is at the core, represented here by the logo of Cantata, our own product that helps automate safety-critical software testing.

Various team functions are identified and a clear flow between the infrastructure and its users emerges.

Cantata is featured here because our product is specifically architected to support the hybrid development model. But the fundamental ideas are independent of the tools used.

The important aspect is to allow testers to automate the creation and running of tests as much as possible, leading to shorter and more iterative development cycles.

Linking Requirements to Test

I often see requirements as the driver behind the coding and testing stages. But that is the wrong way to understand them. We should use requirements first to identify not the solution, but the problems that need solving. Let me explain.

Initial requirements, in a perfect world, should be summarised as a set of characteristics such as performance, safety, availability, and functionality. These attributes must be measured so that the people who are designing and implementing the solution will know if they are on a safe track.

The team will have to talk to the domain experts to get sufficient and complete requirement information, which will lead to the iterative development of a set of design and functional specifications. These specifications will help the testers to define test cases more effectively and to monitor their success.

One-to-one mapping between tests and requirements is the best method to identify problems. Once any defects have been identified, work can go back to the requirements document and line up the different views that the stakeholders have on the problem.

Requirements Traceability

Bi-directional requirements traceability is required by all the main safety standards, including ISO 26262, IEC 61508, EN 50128, DO-178C, and so on. The document or artifact generated by the design process should be usable during the testing phase. So we need to make sure not only that the requirements are recorded properly, but also that our testing tools can associate specific tests with those requirements.

Keeping track of requirements is nothing new. From early lean practitioners to modern frameworks such as Agile and Scrum, the advice has always been to work on solid requirements up front, and to capture the requirements in a version management system in the same way we capture development work.

Tools like our own Cantata allow you to associate tests with requirements easily by tagging each test case with the requirement it verifies. This is an important aspect of supporting bi-directional traceability.
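To make the tagging idea concrete, here is a minimal sketch in C. It is not how Cantata or any other tool stores its links; the `TracedTest` struct, the requirement IDs and the test names are all hypothetical. It only shows that once each test carries a requirement ID, you can trace in both directions and spot requirements that have no test.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical record: each test case is tagged with the ID of the
   requirement it verifies. All names and IDs are illustrative. */
typedef struct {
    const char *test_name;
    const char *requirement_id;
} TracedTest;

static const char *const REQUIREMENTS[] = { "REQ-001", "REQ-002", "REQ-003" };

static const TracedTest TESTS[] = {
    { "test_brake_engages_on_demand",   "REQ-001" },
    { "test_brake_releases_on_demand",  "REQ-001" },
    { "test_sensor_timeout_is_flagged", "REQ-002" },
};

#define REQ_COUNT  (sizeof REQUIREMENTS / sizeof REQUIREMENTS[0])
#define TEST_COUNT (sizeof TESTS / sizeof TESTS[0])

/* Forward trace: how many tests verify a given requirement? */
size_t tests_for_requirement(const char *requirement_id)
{
    size_t count = 0;
    for (size_t i = 0; i < TEST_COUNT; i++) {
        if (strcmp(TESTS[i].requirement_id, requirement_id) == 0) {
            count++;
        }
    }
    return count;
}

/* Backward trace: which requirement does a test verify?
   Returns NULL when the test name is unknown. */
const char *requirement_for_test(const char *test_name)
{
    for (size_t i = 0; i < TEST_COUNT; i++) {
        if (strcmp(TESTS[i].test_name, test_name) == 0) {
            return TESTS[i].requirement_id;
        }
    }
    return NULL;
}

/* Gap check: number of requirements that no test is linked to. */
size_t untested_requirement_count(void)
{
    size_t gaps = 0;
    for (size_t i = 0; i < REQ_COUNT; i++) {
        if (tests_for_requirement(REQUIREMENTS[i]) == 0) {
            gaps++;
        }
    }
    return gaps;
}

/* Worked check: REQ-003 has no test yet, so exactly one gap is reported. */
void check_traceability_example(void)
{
    assert(tests_for_requirement("REQ-001") == 2);
    assert(strcmp(requirement_for_test("test_sensor_timeout_is_flagged"),
                  "REQ-002") == 0);
    assert(untested_requirement_count() == 1);
}
```

In a real project the two tables would come from the requirements tool and the test tool rather than being typed by hand, but the gap check is the same question an auditor will ask.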

Efficient Test Design

Test design is key to achieving a minimal set of useful test cases for both linking to requirements and fully exercising the code produced.

In safety-critical industries, everything comes back to the V-model.

We need the same basic engineering concepts that apply to the design of good software when designing tests. Efficient test design comprises a variety of steps. Each step should seek to optimise the test cases and eliminate any that duplicate functionality. That means these tests will be more specific and easier to maintain.

You want to optimise test cases by reducing the total number of tests required to achieve the same coverage. If this step is missed, you could end up in a situation where adding functionality to the software under test demands disproportionately more effort in maintaining the existing test suite.
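One common way to keep the set small is to choose one test per boundary of behaviour instead of many values that all exercise the same path. The sketch below assumes a hypothetical unit, `classify_temperature`, with made-up thresholds; four table rows are enough to take every decision in it both ways.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { TEMP_LOW, TEMP_NORMAL, TEMP_HIGH } TempClass;

/* Hypothetical unit under test; the thresholds are illustrative. */
TempClass classify_temperature(int celsius)
{
    if (celsius < 0) {
        return TEMP_LOW;
    }
    if (celsius > 85) {
        return TEMP_HIGH;
    }
    return TEMP_NORMAL;
}

/* One row per side of each boundary. Adding -20, -10 and -5 as well
   would add maintenance effort without exercising any new path. */
typedef struct {
    int       input;
    TempClass expected;
} TempCase;

static const TempCase TEMP_CASES[] = {
    { -1, TEMP_LOW    },
    {  0, TEMP_NORMAL },
    { 85, TEMP_NORMAL },
    { 86, TEMP_HIGH   },
};

/* Table-driven test: the loop is written once, cases are data. */
void test_temperature_boundaries(void)
{
    for (size_t i = 0; i < sizeof TEMP_CASES / sizeof TEMP_CASES[0]; i++) {
        assert(classify_temperature(TEMP_CASES[i].input)
               == TEMP_CASES[i].expected);
    }
}
```

Keeping the cases in a table also means that a change to the specification becomes a change to one row, not to a block of test code.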

The specification of the software must drive the nature of the tests. For unit testing, we design the tests to check that the individual unit meets all design decisions taken in the design specification of the unit.

For integration testing, we design our tests to check that different units work together as designed.

Moves from one phase to another should be seamless.

It is important not to over-specify tests, as this could degrade their efficiency and effectiveness. If a test is too complex, it will be hard to maintain.

Unit and Integration Testing

A key aspect of unit testing is not what is defined at the source code level as a unit, module or component, but that we verify the unit as an executable, without needing the whole application or some external test driver.

We must do unit testing at a minimalistic level, aligned to how the work was designed and coded.

An excellent test case will automate the smallest possible amount of work, and verify things like correct use of interfaces and correct internal processing of data.

Integration testing covers the test scenarios required to properly integrate separate components or modules (the integration points) in a system or software project. This includes validating the connectivity and correctness of integrated components and modules, and verifying that the input and output data exchanged pass the correct signals through the module.

So let’s review some global aims of our testing…

Does code meet the quality standard?

Static analysis tools are powerful: they allow you to mine your source code without running it.

Although these tools are not specifically useful in test case development, they can be very useful during a pre-test check.

Does the code do what it should?

We can split this into two parts…

Functional Requirements Testing:

It’s important that we identify and document all the functionality that the software may have, and where we can test it: from base requirements, to user stories, to acceptance tests.

Non-functional Requirements Testing:

Things like performance, safety, reliability, usability, compatibility and operational readiness.

The whole idea is that you need to have a complete set of tests that puts everything together, from high-level acceptance tests to detailed unit tests.

Does the code NOT do what it should not?

Or… Robustness testing:

Crunching through the functional test suite is not enough. We have to be sure we are testing the code with sufficient error cases and bad data. We want to ensure that the whole application performs under load and that any faults caused are discovered under a realistic test environment.
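As a small illustration of the bad-data side of this (it does not cover load testing), the sketch below assumes a hypothetical unit `parse_speed_kmh` with an invented upper limit. The test feeds it only inputs that should be rejected and checks that each one is refused with the right status and that the output is never written.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>

typedef enum {
    PARSE_OK,
    PARSE_NULL_ARG,
    PARSE_NOT_A_NUMBER,
    PARSE_OUT_OF_RANGE
} ParseStatus;

#define MAX_SPEED_KMH 300L  /* illustrative limit, not from any standard */

/* Hypothetical unit under test: converts text to a speed in km/h. */
ParseStatus parse_speed_kmh(const char *text, int *speed_out)
{
    if (text == NULL || speed_out == NULL) {
        return PARSE_NULL_ARG;
    }

    char *end = NULL;
    errno = 0;
    long value = strtol(text, &end, 10);

    if (end == text || *end != '\0') {
        return PARSE_NOT_A_NUMBER;   /* empty input or trailing garbage */
    }
    if (errno == ERANGE || value < 0 || value > MAX_SPEED_KMH) {
        return PARSE_OUT_OF_RANGE;
    }

    *speed_out = (int)value;
    return PARSE_OK;
}

/* Robustness test: every case here is an error case. */
void test_parse_speed_rejects_bad_input(void)
{
    int speed = -1;

    assert(parse_speed_kmh(NULL, &speed) == PARSE_NULL_ARG);
    assert(parse_speed_kmh("120", NULL) == PARSE_NULL_ARG);
    assert(parse_speed_kmh("", &speed) == PARSE_NOT_A_NUMBER);
    assert(parse_speed_kmh("12x", &speed) == PARSE_NOT_A_NUMBER);
    assert(parse_speed_kmh("-5", &speed) == PARSE_OUT_OF_RANGE);
    assert(parse_speed_kmh("301", &speed) == PARSE_OUT_OF_RANGE);
    assert(parse_speed_kmh("99999999999999999999", &speed)
           == PARSE_OUT_OF_RANGE);

    assert(speed == -1);  /* output left untouched on every failure */
}
```

The last assertion is the one that tends to be forgotten: a function that reports an error but still overwrites its output can pass every status check and still corrupt the caller.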

Have we tested the code enough?

Structural Coverage Testing:

We need to identify which parts of code are not exercised at all, so we can be sure we add tests to cover the complete solution. The key to writing suitable test cases comes from mastering the concept of test coverage.

Test cases that are small and apply to all branches are simple to write, but they are not the easiest test cases to prepare the framework for. This is where automation can help.
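To show what “not exercised at all” looks like, here is a hand-rolled sketch of branch probes in C. Coverage tools typically add comparable instrumentation for you; the `PROBE` macro, the branch IDs and `clamp_duty_cycle` are all hypothetical. The point is that a test can pass while still leaving a branch unexecuted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hand-written branch probes, for illustration only. */
enum { BR_NEGATIVE, BR_OVER_LIMIT, BR_IN_RANGE, BR_COUNT };

static bool branch_hit[BR_COUNT];

#define PROBE(id) (branch_hit[(id)] = true)

/* Hypothetical unit under test: clamps a PWM duty cycle to 0..100. */
int clamp_duty_cycle(int percent)
{
    if (percent < 0) {
        PROBE(BR_NEGATIVE);
        return 0;
    }
    if (percent > 100) {
        PROBE(BR_OVER_LIMIT);
        return 100;
    }
    PROBE(BR_IN_RANGE);
    return percent;
}

void reset_coverage(void)
{
    for (size_t i = 0; i < (size_t)BR_COUNT; i++) {
        branch_hit[i] = false;
    }
}

size_t branches_covered(void)
{
    size_t covered = 0;
    for (size_t i = 0; i < (size_t)BR_COUNT; i++) {
        if (branch_hit[i]) {
            covered++;
        }
    }
    return covered;
}

/* This test passes, yet it never takes the negative-input branch. */
void test_clamp_typical_values(void)
{
    assert(clamp_duty_cycle(50) == 50);
    assert(clamp_duty_cycle(150) == 100);
}
```

After `test_clamp_typical_values()` runs, `branches_covered()` reports two of the three branches; the missing one is exactly the input nobody thought to try.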

Automation has its place

Automatic test generation is a key benefit of modern testing tools, but it has to be flexible.

Automatic test generation can deliver a complete framework of structured tests for flexible approaches: unit and integration, black-box and white-box, for procedural and OO code.

A good, automated test framework can also:

- provide high-level code coverage measurement and prove that test cases accurately cover the source using code coverage analysis tools;

- help construct a reusable test framework by creating test code based on a suite of well-designed test cases;

- provide a regression test suite, running the test cases whenever new functionality is introduced to the software and giving an early warning of any potential problems;

- automatically produce suitable data to drive unit testing.

However, remember that tests still need to be manually verified and linked back to requirements. And you must still add manual tests.

Tests do not improve quality: developers do

Non-trivial software can process a very large, effectively infinite, number of different input conditions. This is further complicated in situations where the order and timing of data entry are important.

Testers have the laborious task of developing a few discrete cases to test a system that can accommodate an infinite number of scenarios. The results of testing activities need to be fed back to the development team.

The important thing to remember is that tests do not improve quality: developers do.

Minimum Viable Test

I briefly mentioned optimising test cases to streamline the testing approach. But let’s look closer at what we mean by a minimum viable test.

The aim of the first test case in any unit test process should be to execute the unit under test in the simplest way possible.

The general approach that leads to a minimum viable test is this:

- First, write the simplest possible test case for that one specific module.

We should replace any external modules with simple stubs (or simulations) that permit a single test case to be executed and provide a minimal return value. We can do this by capturing all inputs that the module may need within the test script itself.

- Then we can write a set of assertions that verify the test case properly executes the module under test.

When moving from simple unit testing to integration-type testing, we can replace the stubs with the actual functions. Tools such as Cantata can help provide inputs and checking at the interface points… giving you a migration path between isolation testing and integration testing.
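The steps above can be sketched in a few lines of C. This example passes the external dependency in as a function pointer so that it can be stubbed; that is just one way to isolate a unit and is not a description of how any particular tool does it. The names `heater_should_run` and `read_temperature_stub`, and the 18-degree threshold, are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Interface to an external module, for example a sensor driver. */
typedef int (*ReadTemperatureFn)(void);

/* Hypothetical unit under test: run the heater below 18 degrees. */
bool heater_should_run(ReadTemperatureFn read_temperature)
{
    return read_temperature() < 18;
}

/* Stub for the external module. The test script sets the value it
   returns, so every input the unit needs lives in the test itself. */
static int stub_temperature = 0;
static int stub_call_count = 0;

int read_temperature_stub(void)
{
    stub_call_count++;
    return stub_temperature;
}

/* Minimum viable test: one call, one input, minimal assertions. */
void test_heater_runs_when_cold(void)
{
    stub_temperature = 10;
    stub_call_count = 0;

    bool result = heater_should_run(read_temperature_stub);

    assert(result);                /* correct processing of the data */
    assert(stub_call_count == 1);  /* interface used exactly once    */
}
```

Moving towards integration testing then means passing the real driver function to `heater_should_run` in place of `read_temperature_stub`, while the assertions on the result stay the same.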

Automatic Test Generation

Automatic Test Generation is useful here because it can produce test cases which are relatively targeted and can help ensure you have considered branches and loops.

Automatic test case generation is typically a lot cheaper than manual generation.

Cantata is a fully featured test tool that automates unit testing and builds the test environment.

Automatic generation of the test harness and stub code gives you a rapid way to begin testing with your minimum viable tests.

The confidence gained from knowing that you can execute a simple unit test isolated from the complete system is valuable. It not only provides the tester a foundation upon which to build, but also a route to debug any failures.

Metrics for performance, progress monitoring and estimation

Plan the testing aspects as precisely as you would the entire project. An important part of this plan is defining target metrics. For example, how many undetected errors, of which category, can the software under test have at delivery? The type and extent of testing will depend on these figures.

Code metrics are measures taken automatically from source code, for example the number of independent paths. If we know from previous experience how long it takes, on average, to test each path, then we can estimate the time to test the complete application.
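As a rough sketch of that arithmetic, the code below assumes the path count is taken as McCabe cyclomatic complexity, which for a single function with one entry and one exit is the number of decision points plus one. The struct, the function names and the 20 minutes per path are assumptions for illustration; your own historical figure should replace them.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-function metric, as a metrics tool might report it. */
typedef struct {
    const char *name;
    unsigned    decision_points;  /* if, while, for, case, &&, || ... */
} FunctionMetric;

/* Independent paths for one single-entry, single-exit function. */
unsigned independent_paths(const FunctionMetric *fn)
{
    return fn->decision_points + 1u;
}

/* Estimate = total independent paths x average minutes per path. */
unsigned estimate_test_minutes(const FunctionMetric *fns, size_t count,
                               unsigned minutes_per_path)
{
    unsigned total_paths = 0;
    for (size_t i = 0; i < count; i++) {
        total_paths += independent_paths(&fns[i]);
    }
    return total_paths * minutes_per_path;
}

/* Worked example: 1 + 5 + 3 = 9 paths, at 20 minutes each = 180. */
void check_estimate_example(void)
{
    const FunctionMetric module[] = {
        { "init",     0u },
        { "update",   4u },
        { "shutdown", 2u },
    };
    assert(estimate_test_minutes(module, 3, 20u) == 180u);
}
```

An estimate like this is only a starting point; it assumes every path costs about the same to test, which the hard-to-reach parts of an application will not respect.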

Using suitable tools to gather the metrics is vital.

By analysing code coverage information, extracted from running the test suite as we build it up, it is possible to refine the initial estimates and monitor the progress of the testing process.

However, beware that as you approach high levels of code coverage, say 90+%, the testing effort can rise steeply. Difficult-to-test and complex parts of the application are often hiding in the final 10%.

Evidence Reporting & Collaboration

Project Managers can find value in centralised reporting, and it helps individuals to collaborate.

Data could be aggregated for software modules, showing current code test status and code coverage metrics with a history… build by build.

This can help to identify trends and plan actions. Managers can get a complete picture across all test runs.
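A minimal sketch of such a build-by-build history is shown below. The `BuildResult` fields and the sample figures are invented for illustration; a real report would be fed from your CI server and test tool. It computes a pass rate per build and finds the first build where coverage fell.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-build record for one software module. */
typedef struct {
    unsigned build_number;
    unsigned tests_passed;
    unsigned tests_failed;
    double   coverage_percent;
} BuildResult;

/* Whole-number pass rate; 0 when the build ran no tests. */
unsigned pass_rate_percent(const BuildResult *build)
{
    unsigned total = build->tests_passed + build->tests_failed;
    return (total == 0u) ? 0u : (build->tests_passed * 100u) / total;
}

/* Index of the first build whose coverage is lower than the build
   before it, or -1 if coverage never dropped. */
int first_coverage_drop(const BuildResult *history, size_t count)
{
    for (size_t i = 1; i < count; i++) {
        if (history[i].coverage_percent < history[i - 1].coverage_percent) {
            return (int)i;
        }
    }
    return -1;
}

/* Worked example with made-up data: coverage dips at the third build. */
void check_history_example(void)
{
    const BuildResult history[] = {
        { 101u, 40u, 0u, 72.5 },
        { 102u, 44u, 1u, 75.0 },
        { 103u, 44u, 1u, 74.0 },
    };
    assert(pass_rate_percent(&history[1]) == 97u);
    assert(first_coverage_drop(history, 3) == 2);
}
```

A drop like the one at build 103 is often the first visible sign that new code went in without new tests.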

Running tests

This is a sample build pipeline from a CI process.

After we have designed suitable test cases, we can execute them. Running the tests can be manual, semi-automatic or fully automatic. The level of automation depends on two factors: the liability for software errors and the repetition rate of the tests.

For safety-related applications, the preference should always be to run automated testing.

Well-designed test scripts allow repeatability of tests.

In DevOps environments, the gold standard is to execute a complete set of automated tests on each code modification. This allows for rapid feedback to developers and the confidence that the code is always in a tested and ‘ready-to-release’ state.

Outside of DevOps, test automation allows for easy regression testing. This allows developers to ensure that added functionality does not compromise previous testing effort.
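As a sketch of what a repeatable regression run can look like without any framework, the code below registers test functions in a table and reports the number of failures. The suite, the test names and `saturating_add` are hypothetical; the failure count is what a CI job would use to pass or fail the build.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

typedef bool (*TestFn)(void);

typedef struct {
    const char *name;
    TestFn      run;
} TestEntry;

/* Hypothetical code under test: addition that saturates at a limit. */
int saturating_add(int a, int b, int limit)
{
    int sum = a + b;
    return (sum > limit) ? limit : sum;
}

bool test_add_below_limit(void)
{
    return saturating_add(2, 3, 10) == 5;
}

bool test_add_clamps_at_limit(void)
{
    return saturating_add(8, 5, 10) == 10;
}

/* New tests are appended here; existing ones keep running unchanged. */
static const TestEntry REGRESSION_SUITE[] = {
    { "test_add_below_limit",     test_add_below_limit     },
    { "test_add_clamps_at_limit", test_add_clamps_at_limit },
};

/* Runs every registered test and returns the number of failures. */
size_t run_regression_suite(void)
{
    size_t failures = 0;
    size_t count = sizeof REGRESSION_SUITE / sizeof REGRESSION_SUITE[0];

    for (size_t i = 0; i < count; i++) {
        assert(REGRESSION_SUITE[i].run != NULL);
        bool ok = REGRESSION_SUITE[i].run();
        printf("%-28s %s\n", REGRESSION_SUITE[i].name, ok ? "PASS" : "FAIL");
        if (!ok) {
            failures++;
        }
    }
    return failures;
}
```

Because the suite is just data, running it on every code change costs nothing extra once it exists, which is the property the DevOps “gold standard” relies on.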

We should make an investment in a suitable test automation framework upfront to save effort throughout the duration of the project.

So that brings us to the end of our list of important points to consider when putting in place a process with testing at the core.

Remember, a well-designed process results in:

- Shorter project time

- Better quality

- Lower cost

Don’t worry about having the perfect system. It won’t be. The goal isn’t perfection; it’s improving as you understand yourself, and particularly your team, better throughout the process.

Takeaways

Test earlier

Experience has shown that a conscientious approach to unit testing will detect many bugs at a stage of software development where we can correct them economically.

Iterative testing

Be humble about what your unit tests can achieve. Unless you have extensive requirements documentation for the unit under test, the testing phase will be iterative and exploratory.

Take your time

It doesn’t have to be perfect — but it helps to be close.